I'm going to show you two ways to analyze dump files: an old method I used years ago, and the newer method using Debugging Tools for Windows.

The old method using dumpchk and pstat

I have only used this method on Windows 2000 Server, and that was many years ago. I do not know whether it works on newer operating systems such as Windows Server 2008 or Windows 7!

Before we proceed, get a copy of dumpchk.exe from the Windows Server 2003 Support Tools, which can be downloaded here:

http://www.microsoft.com/downloads/details.aspx?FamilyId=6EC50B78-8BE1-4E81-B3BE-4E7AC4F0912D&displaylang=en

pstat.exe is part of the Windows 2000 Resource Kit tools, which can be found here:

http://support.microsoft.com/kb/927229

First, run dumpchk.exe against your dump file. It is handy to redirect the output to a text file so it is easier to read:

dumpchk.exe C:\WINDOWS\Minidump\Mini102609-01.dmp > c:\dumpchkresults.txt

In the resulting text file, you are looking specifically for the "ExceptionAddress" field:

MachineImageType i386

NumberProcessors 1

BugCheckCode 0xc000021a

BugCheckParameter1 0xe1270188

BugCheckParameter2 0x00000001

BugCheckParameter3 0x00000000

BugCheckParameter4 0x00000000

ExceptionCode 0x80000003

ExceptionFlags 0x00000001

ExceptionAddress 0x8014fb84

In this instance the ExceptionAddress is 0x8014fb84.
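If you have many dump reports to go through, pulling the address out of the text file can be scripted. The sketch below is a minimal example, assuming the field appears as in the sample above ("ExceptionAddress" followed by a hex value, with or without a 0x prefix or colon); other dumpchk versions may format it differently, and the function name is hypothetical.

```python
import re
from typing import Optional

# Assumed layout, based on the sample above: "ExceptionAddress 0x8014fb84".
# An optional colon and an optional 0x prefix are tolerated.
_EXCEPTION_ADDRESS = re.compile(
    r"^\s*ExceptionAddress\s*:?\s*(?:0x)?([0-9a-fA-F]+)", re.MULTILINE
)


def find_exception_address(dumpchk_text: str) -> Optional[int]:
    """Return the first ExceptionAddress in dumpchk output as an int, or None."""
    match = _EXCEPTION_ADDRESS.search(dumpchk_text)
    return int(match.group(1), 16) if match else None


if __name__ == "__main__":
    sample = """BugCheckCode 0xc000021a
ExceptionCode 0x80000003
ExceptionFlags 0x00000001
ExceptionAddress 0x8014fb84
"""
    print(hex(find_exception_address(sample)))  # prints 0x8014fb84
```

To use it on the redirected report, read c:\dumpchkresults.txt into a string and pass it to find_exception_address.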

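The next step is to boot the server and run pstat.exe on it. Again, it is good to redirect the results to a text file. pstat lists all the loaded drivers along with their load addresses and how much memory they use. This relies on the drivers loading at the same addresses after the reboot as they did before the crash.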

| Module name | Load Addr (hex) | Code | Data | Paged | Link Date |
|---|---|---|---|---|---|
| Ntoskrnl.exe | 80100000 | 270272 | 40064 | 434816 | Sun May 11 00:10:39 1997 |
| Hal.dll | 80010000 | 20384 | 2720 | 9344 | Mon Mar 10 16:39:20 1997 |
| Aic78xx.sys | 80001000 | 20512 | 2272 | 0 | Sat Apr 05 21:16:21 1997 |
| Scsiport.sys | 801d7000 | 9824 | 32 | 15552 | Mon Mar 10 16:42:27 1997 |
| Disk.sys | 80008000 | 3328 | 0 | 7072 | Thu Apr 24 22:27:46 1997 |
| Class2.sys | 8000c000 | 7040 | 0 | 1632 | Thu Apr 24 22:23:43 1997 |
| Ino_flpy.sys | 801df000 | 9152 | 1472 | 2080 | Tue May 26 18:21:40 1998 |
| Ntfs.sys | 801e3000 | 68160 | 5408 | 269632 | Thu Apr 17 22:02:31 1997 |
| Floppy.sys | f7290000 | 1088 | 672 | 7968 | Wed Jul 17 00:31:09 1996 |
| Cdrom.sys | f72a0000 | 12608 | 32 | 3072 | Wed Jul 17 00:31:29 1996 |
| Cdaudio.sys | f72b8000 | 960 | 0 | 14912 | Mon Mar 17 18:21:15 1997 |
| Null.sys | f75c9000 | 0 | 0 | 288 | Wed Jul 17 00:31:21 1996 |
| Ksecdd.sys | f7464000 | 1280 | 224 | 3456 | Wed Jul 17 20:34:19 1996 |
| Beep.sys | f75ca000 | 1184 | 0 | 0 | Wed Apr 23 15:19:43 1997 |
| Cs32ba11.sys | fcd1a000 | 52384 | 45344 | 14592 | Wed Mar 12 17:22:33 1997 |
| Msi8042.sys | f7000000 | 20192 | 1536 | 0 | Mon Mar 23 22:46:22 1998 |
| Mouclass.sys | f7470000 | 1984 | 0 | 0 | Mon Mar 10 16:43:11 1997 |
| Kbdclass.sys | f7478000 | 1952 | 0 | 0 | Wed Jul 17 00:31:16 1996 |
| Videoprt.sys | f72d8000 | 2080 | 128 | 11296 | Mon Mar 10 16:41:37 1997 |
| Ati.sys | f7010000 | 960 | 9824 | 48768 | Fri Dec 12 15:20:37 1997 |
| Vga.sys | f7488000 | 128 | 32 | 10784 | Wed Jul 17 00:30:37 1996 |
| Msfs.sys | f7308000 | 864 | 32 | 15328 | Mon Mar 10 16:45:01 1997 |
| Npfs.sys | f7020000 | 6560 | 192 | 22624 | Mon Mar 10 16:44:48 1997 |
| Ndis.sys | fccda000 | 11744 | 704 | 96768 | Thu Apr 17 22:19:45 1997 |
| Win32k.sys | a0000000 | 1162624 | 40064 | 0 | Fri Apr 25 21:17:32 1997 |
| Ati.dll | fccba000 | 106176 | 17024 | 0 | Fri Dec 12 15:20:08 1997 |
| Cdfs.sys | f7050000 | 5088 | 608 | 45984 | Mon Mar 10 16:57:04 1997 |
| Ino_fltr.sys | fc42f000 | 29120 | 38176 | 1888 | Tue Jun 02 16:33:05 1998 |
| Tdi.sys | fc4a2000 | 4480 | 96 | 288 | Wed Jul 17 00:39:08 1996 |
| Tcpip.sys | fc40b000 | 108128 | 7008 | 10176 | Fri May 09 17:02:39 1997 |
| Netbt.sys | fc3ee000 | 79808 | 1216 | 23872 | Sat Apr 26 21:00:42 1997 |
| El90x.sys | f7320000 | 24576 | 1536 | 0 | Wed Jun 26 20:04:31 1996 |
| Afd.sys | f70d0000 | 1696 | 928 | 48672 | Thu Apr 10 15:09:17 1997 |
| Netbios.sys | f7280000 | 13280 | 224 | 10720 | Mon Mar 10 16:56:01 1997 |
| Parport.sys | f7460000 | 3424 | 32 | 0 | Wed Jul 17 00:31:23 1996 |
| Parallel.sys | f746c000 | 7904 | 32 | 0 | Wed Jul 17 00:31:23 1996 |
| Parvdm.sys | f7552000 | 1312 | 32 | 0 | Wed Jul 17 00:31:25 1996 |
| Serial.sys | f7120000 | 2560 | 0 | 18784 | Mon Mar 10 16:44:11 1997 |
| Rdr.sys | fc385000 | 13472 | 1984 | 219104 | Wed Mar 26 14:22:36 1997 |
| Mup.sys | fc374000 | 2208 | 6752 | 48864 | Mon Mar 10 16:57:09 1997 |
| Srv.sys | fc24a000 | 42848 | 7488 | 163680 | Fri Apr 25 13:59:31 1997 |
| Pscript.dll | f9ec3000 | 0 | 0 | 0 | |
| Fastfat.sys | f9e00000 | 6720 | 672 | 114368 | Mon Apr 21 16:50:22 1997 |
| Ntdll.dll | 77f60000 | 237568 | 20480 | 0 | Fri Apr 11 16:38:50 1997 |
| Total | | 2377632 | 255040 | 1696384 | |

With this information, take the ExceptionAddress and find which module's memory range it falls into using the data provided by pstat.exe. This is just an example, but here 0x8014fb84 sits above Ntoskrnl.exe's load address (80100000) and below the next-highest load address in the list (Scsiport.sys at 801d7000), so the crash was caused by Ntoskrnl.exe. Microsoft wrote a KB article documenting this procedure:
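The lookup itself, picking the module whose load address is the highest one still at or below the exception address, can be sketched as follows. This is a minimal illustration, assuming pstat's whitespace-separated output with the module name in the first column and the hex load address in the second; the function names are hypothetical. It is a heuristic: it does not check module sizes, so an address beyond the end of the last image would still be attributed to that image.

```python
import bisect
from typing import List, Optional, Tuple


def parse_pstat_modules(text: str) -> List[Tuple[int, str]]:
    """Return (load_address, module_name) pairs sorted by load address.

    Assumes pstat's layout: module name first, hex load address second.
    Header, separator and Total rows are skipped.
    """
    modules = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 2 or "." not in fields[0]:
            continue  # separator, header ("MODULENAME") or "Total" row
        try:
            modules.append((int(fields[1], 16), fields[0]))
        except ValueError:
            continue  # second column is not a hex address
    return sorted(modules)


def module_for_address(modules: List[Tuple[int, str]], address: int) -> Optional[str]:
    """Return the module with the highest load address <= address, or None."""
    bases = [base for base, _ in modules]
    index = bisect.bisect_right(bases, address) - 1
    return modules[index][1] if index >= 0 else None


if __name__ == "__main__":
    sample = """MODULENAME Load Addr Code Data Paged LinkDate
Ntoskrnl.exe 80100000 270272 40064 434816 Sun May 11 00:10:39 1997
Hal.dll 80010000 20384 2720 9344 Mon Mar 10 16:39:20 1997
Scsiport.sys 801d7000 9824 32 15552 Mon Mar 10 16:42:27 1997
Total 2377632 255040 1696384
"""
    print(module_for_address(parse_pstat_modules(sample), 0x8014fb84))  # prints Ntoskrnl.exe
```

Sorting by load address first matters because pstat does not list modules in address order (Hal.dll at 80010000 appears after Ntoskrnl.exe at 80100000).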

http://support.microsoft.com/kb/192463

The new method using Debugging Tools for Windows

The recommended way to analyze dump files is with Debugging Tools for Windows. This is not a single tool but a toolkit containing a wide variety of diagnostic tools. There are 32-bit and 64-bit versions of the product; install the one that matches your system's platform.

As of this writing the latest version is 6.11.1.404.

Download the 32-bit version from here:

http://www.microsoft.com/whdc/devtools/debugging/installx86.Mspx

Download the 64-bit version from here:

http://www.microsoft.com/WHDC/DEVTOOLS/DEBUGGING/INSTALL64BIT.MSPX

The Debugging Tools are very complex, so below I am only going to show you the basic steps for finding out what caused your system to crash. Let's begin.

Before you can analyse a dump file, you first need symbol files. Symbol files contain symbolic information such as function names and data variable names, and they are created when an application is built. Symbol files have a .dbg or .pdb extension. They are used by debuggers from various vendors, including Debugging Tools for Windows, which we are going to use below. Without these files, call stacks (which show the chain of function calls leading to a given point) can be inaccurate or incorrect, and function names are omitted from them. Only Microsoft can provide symbol files for core Windows components such as kernel32.dll, ntdll.dll and user32.dll, since Microsoft developed them. Microsoft also provides symbol files for many third-party applications and drivers.

For more about symbol files, see KB311503 (this is aimed more at developers).

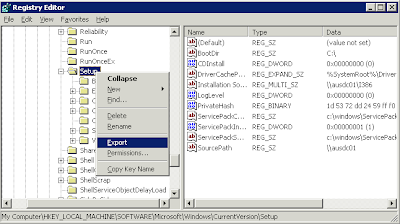

If you have some idea of what is causing the blue screen, you can download symbol files for just the few files you want to analyse. In this case, however, we have no idea what is causing it, so I want to download the symbol files for every driver and Windows application file in C:\Windows\System32.

To download all the symbol files for C:\Windows\System32, use the symchk.exe tool, which comes as part of the Debugging Tools. The /r switch tells it to search the directory recursively. In my example I am placing the symbol files in C:\symbols.

Run the following command:

symchk /r c:\windows\system32 /s SRV*c:\symbols\*http://msdl.microsoft.com/download/symbols

It will give you output similar to this:

Note that it reports FAILED because it cannot find the symbol file in c:\symbols; this is normal. When it cannot find a file, it goes and downloads it. This will take a while: on my server it took just over an hour to download all the symbol files, which came to 558 MB of data.

You will now have symbol files for all the drivers and application libraries in your system32 directory.

You also need a copy of the i386 directory from the Windows CD to analyze the dump. Make sure it is from the same service pack as the system you are running. I'm using Windows Server 2003 SP2, so I had to track down a Windows Server 2003 SP2 CD.

With the symbol files and the i386 directory in place, you can now analyse the dump.

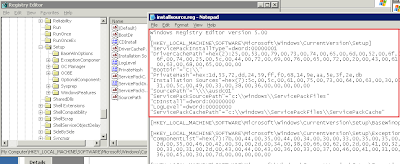

Use the following command from Microsoft KB 315263:

windbg -y SymbolPath -i ImagePath -z DumpFilePath

In my case I used:

windbg -y c:\symbols -i D:\i386 -z C:\WINDOWS\Minidump\Mini123009-01.dmp

WinDbg opens the dump and tells me which file triggered the crash:

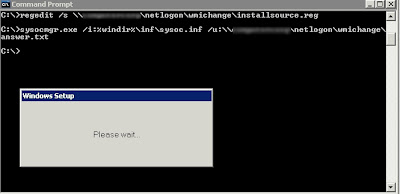

Now that I know bxnd52x.sys caused the crash, I need to link it to a driver. As I described in my article "Permanently Remove Driver", installed third-party drivers are referenced in OEM*.INF files in C:\Windows\inf. To find out which driver caused the crash, search all files in C:\Windows\inf for any that contain the text string "bxnd52x.sys". You can do this using the following command:

find /c "bxnd52x.sys" c:\windows\inf\*.inf | find ":" | find /v ": 0"

This came back with two INF files containing this string.
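The same search can be sketched in Python if you prefer a script over chained find commands. This is a minimal sketch with a hypothetical function name. It assumes INF files are either UTF-16/UTF-8 with a byte order mark or single-byte ANSI text, and, unlike the find command above (which is case-sensitive without /i), it matches case-insensitively, because INF files may spell file names in upper case.

```python
import codecs
from pathlib import Path
from typing import List


def read_inf_text(path: Path) -> str:
    """Decode an INF file: UTF-16 or UTF-8 if a BOM is present, else ANSI."""
    raw = path.read_bytes()
    if raw.startswith((codecs.BOM_UTF16_LE, codecs.BOM_UTF16_BE)):
        return raw.decode("utf-16")  # the BOM selects the byte order
    if raw.startswith(codecs.BOM_UTF8):
        return raw.decode("utf-8-sig")
    return raw.decode("latin-1")  # never fails on single-byte ANSI text


def infs_referencing(inf_dir: str, needle: str) -> List[Path]:
    """Return the .inf files in inf_dir that mention needle (case-insensitive)."""
    needle = needle.lower()
    return sorted(
        p
        for p in Path(inf_dir).iterdir()
        if p.is_file()
        and p.suffix.lower() == ".inf"
        and needle in read_inf_text(p).lower()
    )


if __name__ == "__main__":
    for inf in infs_referencing(r"C:\Windows\inf", "bxnd52x.sys"):
        print(inf)
```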

If we open these files, we can see it is the Broadcom network driver!

Note that I have two because I had already updated the driver to see whether that would fix the problem, which it didn't. One is version 2.8.13 and the new one is version 5.0.13. The old driver files stay in place for the "Roll Back Driver" function in Device Manager. Since upgrading the driver did not fix the problem, it points to a fault in the Broadcom network adapter itself. This network adapter is onboard, so it looks like I'm going to have to contact HP and arrange for a new mainboard.

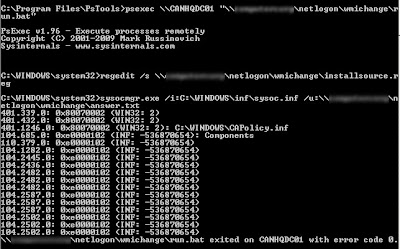
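To compare the two driver versions without opening each INF by hand, you can read the DriverVer line in the [Version] section, which has the form DriverVer=date,version. The sketch below is a minimal example with a hypothetical function name; it only looks at the first DriverVer line and ignores string-token substitution (%...%), which is an assumption that holds for simple INF files. The dates in the usage example are made up; only the version numbers come from this article.

```python
import re
from typing import Optional, Tuple

# DriverVer=mm/dd/yyyy[,w.x.y.z]; spaces around "=" and "," are tolerated.
_DRIVER_VER = re.compile(
    r"^\s*DriverVer\s*=\s*[^,;\r\n]*,\s*(\d+(?:\.\d+)*)",
    re.IGNORECASE | re.MULTILINE,
)


def driver_version(inf_text: str) -> Optional[Tuple[int, ...]]:
    """Return the DriverVer version as a tuple of ints, or None if absent."""
    match = _DRIVER_VER.search(inf_text)
    if match is None:
        return None
    return tuple(int(part) for part in match.group(1).split("."))


if __name__ == "__main__":
    old = driver_version("[Version]\nDriverVer=01/15/2008,2.8.13\n")  # hypothetical date
    new = driver_version("[Version]\nDriverVer=06/01/2009,5.0.13\n")  # hypothetical date
    print(old, new, new > old)  # prints (2, 8, 13) (5, 0, 13) True
```

Comparing integer tuples rather than strings avoids the trap where "10.0" would sort before "2.8" as text.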

One more thing I would like to point out: in windbg.exe you can enter additional commands to get more information, as described in KB315263.

The !analyze -v command displays verbose output.

This shows a few pages of information on how it determined that bxnd52x.sys caused the issue. Most of this is beyond me, but it's good to know about, as Microsoft or a vendor may request this information.
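If you want the !analyze -v output saved to a file rather than read in the WinDbg window, the console debugger kd.exe (also part of Debugging Tools for Windows) accepts the same -y, -i and -z switches as the windbg command above, plus -c to run commands once the dump is loaded. The sketch below is a minimal wrapper under these assumptions: kd.exe is on your PATH (otherwise pass its full path), and the function names and output file are hypothetical.

```python
import subprocess
from typing import List


def build_kd_command(symbol_path: str, image_path: str, dump_path: str,
                     kd_exe: str = "kd.exe") -> List[str]:
    """Build a kd.exe command line mirroring the windbg -y/-i/-z example."""
    # -c runs the given debugger commands after the dump loads:
    # "!analyze -v" for the verbose analysis, then "q" so kd exits.
    return [kd_exe, "-y", symbol_path, "-i", image_path, "-z", dump_path,
            "-c", "!analyze -v; q"]


def analyze_dump(symbol_path: str, image_path: str, dump_path: str,
                 kd_exe: str = "kd.exe") -> str:
    """Run kd non-interactively and return its console output as text."""
    result = subprocess.run(
        build_kd_command(symbol_path, image_path, dump_path, kd_exe),
        capture_output=True, text=True, check=False)
    return result.stdout


if __name__ == "__main__":
    # Paths from the windbg example in this article.
    report = analyze_dump(r"c:\symbols", r"D:\i386",
                          r"C:\WINDOWS\Minidump\Mini123009-01.dmp")
    with open(r"c:\analyze.txt", "w") as f:  # hypothetical output file
        f.write(report)
```

Passing the command as a list keeps paths with spaces intact without any extra quoting.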

There are many other commands for finding more information. For example, the command "lm N T" lists all the drivers and modules that were loaded on the system at the time of the crash.

I hope you have learnt something from this. As always, I'm looking forward to feedback, so please leave me a comment or email me at clint@kbomb.com.au.